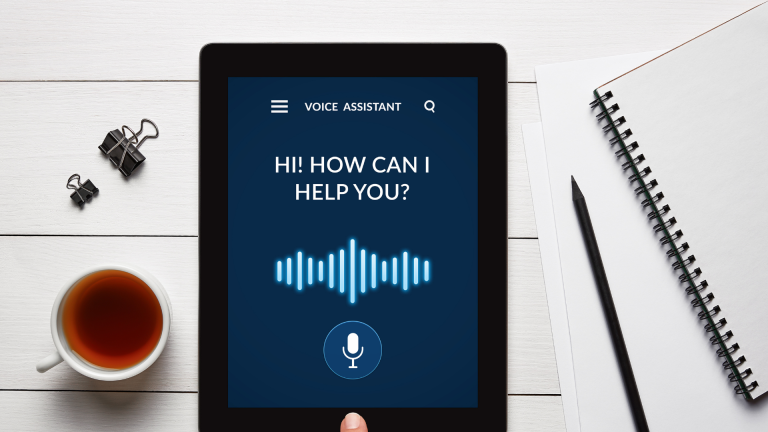

In a recent conversation with a voice expert, I found myself saying, “Voice-first doesn’t mean voice-only,” and was surprised to find that it resonated with her. It’s becoming increasingly clear that the future is multi-modal and that voice alone will not be the answer. Some brands are struggling with the idea of voice-first for all user experiences because they seem to think it signals a commitment to voice-only. That’s simply not true. The strength of a voice-first strategy lies in offering users more options, so they can choose the mode of interaction that delivers the best experience for their time, location, and context.

Many companies with mobile-optimized websites, mobile apps, or customer care centers that already deliver great service are wondering why they should prioritize voice user interfaces (VUIs). The reality is that they should be enhancing all of their existing channels with voice, not replacing other modes of interaction. Like any other UX, voice experiences are designed to improve the overall experience by offering greater convenience, functionality, and hands-free accessibility.

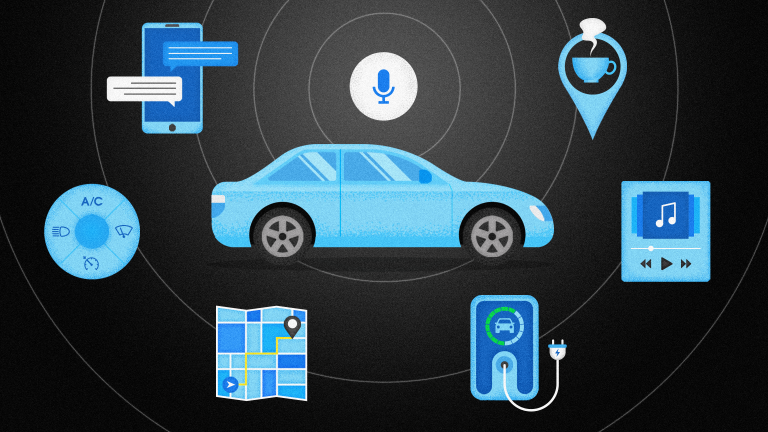

## Voice AI as part of multi-modal experiences

In many use cases, a voice interface can supplement other modes of interaction, including visual, gesture, and touch, or create greater efficiencies in customer service and sales. Voice assistants can also help democratize the use of products and apps by making them more accessible to older adults, people with visual or tactile limitations, and children.

Considering that there are over 110 million virtual assistant users in the United States, 2 in 5 adults use voice search once daily, and 64% of consumers use voice commands while driving, it’s hard to imagine a brand that’s not thinking about a voice solution.

Instead of talking about the benefits of voice alone, brands should be talking about how voice can extend their product functionality and enhance customer experiences when combined with other modes of interaction, including:

- Voice and touchscreen

- Voice and glance/gesture

- Voice and proximity/location

- Voice and icons/sounds