by Karen Scates

Keri Roberts, host of Inside Voice Podcast, recently interviewed two of our engineers working on the Houndify Voice AI platform, Scott Halvstad and Kyle Halvstad. Scott is a software engineer and Kyle is a technical program manager here at SoundHound Inc. The interview focused on the trends, challenges, and best practices of creating custom voice experiences for the automotive market.

This conversation followed a previous episode where Mihai Antonescu, product manager at Mercedes-Benz Research and Development, North America, talked to Keri about “Creating Voice Experiences for Mercedes,” powered by our Houndify Voice AI. Keri was interested in finding out more about the technology behind the voice-enabled MBUX infotainment system and the general trends in voice for the automotive industry—this interview provided the perfect follow-up. Here is a recap of that conversation. You can hear the full podcast here.

Q: Can you talk about some of the common elements car manufacturers need to consider when designing for voice user interfaces in the car?

A: I think for the in-car use case, a lot of people talk about safety because that’s kind of the straightforward thing. You want something that’s hands-free, but that’s not the whole picture. You also want to make an interface that feels fluid, easy, and powerful. So it’s not just hands-free; you also don’t want your mind carrying the cognitive burden of adjusting settings, calling people, and things like that.

You want your mind, as well as your hands, to be free to pay attention to the road. So there’s a set of common elements that can get you close to that, and I think they fit into a few categories. The first one, which is really important, is that it needs to be easy to discover what features are available and to actually use those features in a flexible way.

The importance of fluid integration

So there are lots of different pieces that add up to that fluid and discoverable experience for the customer. The first one is that it needs to integrate very well with the car’s features, like having helpful prompts and dialogues, answering the question, “How do I use this thing?” That has to happen both in the software and in the voice interface itself. So, it should be an ever present companion with the rest of the features of the car instead of being siloed off on its own.

The other major component is obviously a good voice interface. You need to be able to recognize long, complex utterances and do things quickly for the user. That’s very much on the voice interface side: make sure that works well. You don’t want to say, “Find restaurants, but exclude Italian” and get back a list of Italian restaurants, especially in an in-car voice interface, because incorrect results lead the user to look at the screen.

Looking toward the future of voice AI in-car

I also think one of the biggest challenges with cars is designing for the future. It’s very important that you design them to last 10 to 15 years and, in a lot of cases, longer. I think that in-car voice interfaces need connectivity with the cloud, plus good software API and platform design, so that the device and its voice assistant can gain new functionality over time.

So, you want a connection with the cloud and updatability, but you also want your vehicle to be able to operate without that connection, and striking that balance is pretty challenging. So we actually have an embedded hybrid engine at SoundHound that’s available for manufacturers, giving them simultaneous use of an in-car recognizer and a cloud recognizer. Whichever one works best returns the results.
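The hybrid idea described above can be illustrated with a minimal sketch. This is not SoundHound’s implementation; the interview does not describe how the engine chooses between results. Every name here (`Result`, `embedded_recognizer`, `cloud_recognizer`, `recognize`) is hypothetical, the recognizers are stubs that return canned transcripts, and "works best" is assumed to mean "higher confidence score".

```python
import asyncio
from dataclasses import dataclass
from typing import Optional


@dataclass
class Result:
    source: str        # "embedded" or "cloud"
    text: str          # recognized transcript
    confidence: float  # 0.0 to 1.0, higher is better (an assumed metric)


async def embedded_recognizer(audio: bytes) -> Result:
    # Hypothetical stand-in for an on-device recognizer: fast and always available.
    await asyncio.sleep(0.01)
    return Result("embedded", "navigate home", 0.80)


async def cloud_recognizer(audio: bytes, online: bool) -> Result:
    # Hypothetical stand-in for a cloud recognizer: slower, fails with no connection.
    if not online:
        raise ConnectionError("no network")
    await asyncio.sleep(0.05)
    return Result("cloud", "navigate to home", 0.95)


async def recognize(audio: bytes, online: bool, cloud_timeout: float = 0.5) -> Result:
    # Start both recognizers at once so neither waits on the other.
    local_task = asyncio.create_task(embedded_recognizer(audio))
    cloud_task = asyncio.create_task(cloud_recognizer(audio, online))

    local = await local_task
    cloud: Optional[Result] = None
    try:
        cloud = await asyncio.wait_for(cloud_task, timeout=cloud_timeout)
    except (ConnectionError, asyncio.TimeoutError):
        pass  # offline or too slow: keep the on-device result

    # Prefer the cloud result only when it arrived and scored higher.
    if cloud is not None and cloud.confidence > local.confidence:
        return cloud
    return local
```

Calling `asyncio.run(recognize(b"", online=False))` returns the embedded result, which is the property the answer emphasizes: the vehicle keeps working without a connection.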

Q: Can you talk about why car manufacturers are choosing to go with a more customized solution versus adding Alexa or Google into their vehicles?

A: I think the first point that we always bring to the table is that it’s very important for companies, especially automotive companies that often are targeting more of a luxury segment of the market, to be able to retain their strong brands. The automotive industry is obviously very brand-centric. The companies behind platforms like Alexa and Google have a different goal: forming their own relationships with end users. In contrast to that, we want to empower our users, the car manufacturers, to provide a branded and consistent interface to their users.

There are also some technical reasons. The Alexa and Google assistants are self-contained brands. Google, for example, has their own mapping data. We realized that car manufacturers already have agreements in place to use data from other providers, say, TomTom or HERE, and it’s important for manufacturers to retain consistency in their experience. If a user talks with your voice assistant and it delivers different results from typing on the touch screen, that’s confusing and potentially damaging to your brand.

Q: Do drivers have to choose a custom option, or is there a way to get customized voice interfaces and the familiarity of other voice assistants in the same vehicle?

A: Ultimately, I don’t really think the idea of talking with a single voice assistant is the right way to think about it. You don’t really ask your barber or your hairdresser how to fix your car, for instance. When we talk with human experts about things, they have specific roles, and we don’t see there as being one single entity, like Alexa, that knows all the information about everything. Instead, you assemble a group of experts to help run your life. One way to think about that is that you have a plurality of assistants. You have a car that maybe talks to all of them, but I think that’s kind of confusing because they’re not really well separated as far as what their roles and responsibilities are.

So, really the vision for our platform, which we talk about as Collective AI, is that you can have independent domains or programs that know how to understand different things, and they can be independent but help each other out. So the single-assistant, single-data-source model, I don’t think makes a lot of sense.
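As a rough illustration of "independent domains that help each other out," here is a toy sketch. It is not how Collective AI is built; the interview gives no implementation details. The `Assistant` class, the two domain functions, and the keyword matching are all hypothetical simplifications chosen only to show one domain asking another for help.

```python
from typing import Callable, Dict, Optional

# A domain receives a query plus a callback for asking other domains,
# and returns an answer, or None if the query is outside its expertise.
Ask = Callable[[str], Optional[str]]
Domain = Callable[[str, Ask], Optional[str]]


def weather_domain(query: str, ask: Ask) -> Optional[str]:
    # Hypothetical domain: a real one would call a weather data provider.
    if "weather" in query:
        return "sunny"
    return None


def navigation_domain(query: str, ask: Ask) -> Optional[str]:
    # Hypothetical domain that enlists the weather domain for extra context.
    prefix = "navigate to "
    if query.startswith(prefix):
        destination = query[len(prefix):]
        forecast = ask(f"weather in {destination}")
        note = f" (forecast: {forecast})" if forecast else ""
        return f"routing to {destination}{note}"
    return None


class Assistant:
    def __init__(self) -> None:
        self.domains: Dict[str, Domain] = {}

    def register(self, name: str, domain: Domain) -> None:
        self.domains[name] = domain

    def ask(self, query: str) -> Optional[str]:
        # Offer the query to each domain in turn; the first one that answers wins.
        for domain in self.domains.values():
            answer = domain(query, self.ask)
            if answer is not None:
                return answer
        return None


assistant = Assistant()
assistant.register("weather", weather_domain)
assistant.register("navigation", navigation_domain)
```

Here `assistant.ask("navigate to Berkeley")` is handled by the navigation domain, which in turn consults the weather domain. Neither domain needs to know how the other works, only that it can ask.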

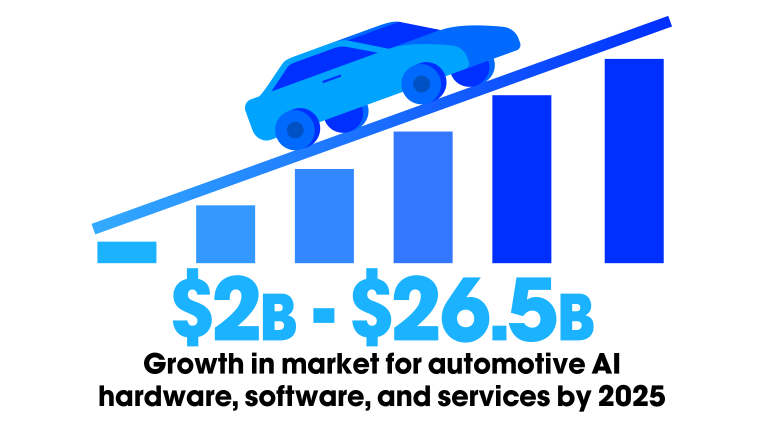

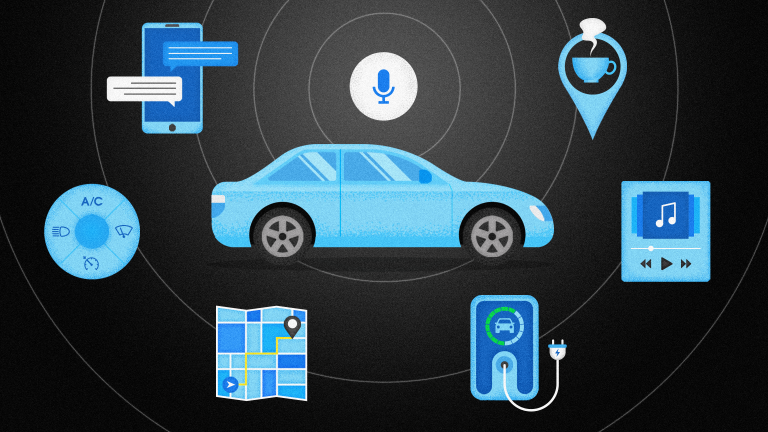

Q: And also, how do you see that evolving in the future?

A: As of today, the primary use cases are obviously navigation for the automotive segment and also making phone calls, starting conversations with other people. But I think that there are a lot of other things that passengers and drivers should expect to be able to do that are a little bit clunky in today’s world.

Obviously, this will get better and more conversational as the technology evolves, and theoretically it could be seamless. But right now, I think that there is only marginal support for more complex use cases. Look at something like navigation or making calls: neither of those things requires connectivity to perform. General knowledge queries, weather, and things like sports scores that take advantage of the power of the cloud are going to start to be a little bit more prominent. As people start to trust their cars, they’ll want to do more and expect the voice assistants to do more.

As we start to get better at solving the general natural language understanding problem, we will unlock different ways of applying the technology that make it seem more like you’re having a useful conversation instead of just giving simple orders.

Q: Do you have any suggestions for car manufacturers to ensure their voice assistants are able to meet future consumer demands?

A: It’s really important to allow things to evolve over time so that the voice interface remains relevant. So having some cloud connectivity is, I think, one aspect of a strategy that will remain successful over time as rapidly changing voice assistant technology gets better and better in the next few years. And if you don’t think big, imagine the possibilities, and plot a path to get there, then you will be behind what competitors are doing.

When we think about voice interfaces, it’s not just a feature on the checklist that’s going to help you sell a luxury car. You should dream about it as being a way to truly delight your users.

Q: What would you say are some things automakers should be looking out for to help them find the right partner?

A: We’re probably a little biased on this, but what I would say is that you should look for a win-win situation. There really needs to be good alignment between the goals of the voice platform and the goals of the product that it’s being integrated into. One of the obvious factors for the automotive use case is that lots of car companies have customers in many different markets around the world. So it’s about understanding how the partnership scales and what the future potential is, and not going for a voice platform that only supports what you want to do right now. Thinking about things that haven’t been done before, because they weren’t possible before, and that delight users is really where this technology is going. So choose a platform that is going in the same direction and is able to scale with the partnership over time. Look for a technology that allows you to grow, is extensible, and has a global presence.

Q: Can you talk about how natural language understanding is helping us truly enhance the voice assistants of today?

A: The way that I think about it is that natural language technology is what will give us the operating system of the future. Right now, applications that we use on computers and websites are designed in a relatively primitive way.

I think that adding true natural language understanding to a voice assistant allows you to escape that rigid framework of supported commands and really approach a more fluid and flexible and discoverable experience for users.

Our computers have a very limited language right now, which is just the buttons that you can click on. When you extend that with natural language, it inherently and dramatically expands the universe of what’s possible. I think natural language and the extensible architecture that we’ve put together are key to being a useful technology in the future.

Q: Voice-first isn’t voice only. Can you explain what that means from an in-car point of view?

A: I think that voice-first is a trend that is particularly exciting, especially working in the voice industry. But really, I think the idea here is that we shouldn’t be thinking about voice as the only way to activate features, but rather as the augmentation of existing features, a superpower. It shouldn’t make things harder or clunkier, and it shouldn’t even change how you do things. It should make it easier. It shouldn’t provide an alternate way to do things that are already possible, like turning on the right blinker or something like that.

It’s not even just voice or something else, where you have multiple options, but you can actually employ voice AND something else. You can point to a building and say, “Hey, what is that?” I think that the interfaces of the future will definitely incorporate voice as kind of an important component, but it’s not always the best tool for the job. While voice can add some additional information, you might be gesturing at something in the car as well.