According to Antonio Damasio, author of “Descartes’ Error,” humans cannot separate their feelings from their thoughts. His research has revealed that human emotion is so intertwined with reason that one cannot make a decision without both.

In the future, we may be interacting with voice assistants in every aspect of our lives—as interfaces into machines, mobile apps, services, and as companions. In this voice-enabled world, we will likely expect those voice entities to adhere to societal norms by combining their logic with emotion—as we do.

As emotionally intelligent entities, voice assistants will be able to recognize emotions and react appropriately, providing the sort of guidance and advice you might get from another person. In some situations, this level of interaction could be as dramatic as a life-saving intervention or as simple as providing the voice cues needed to help defuse an emotional situation.

Emotion detection in the car

Imagine a scenario where a driver is frustrated by the traffic, driving in a new area, and asking for directions to avoid further delays. The voice assistant could detect frustration and confusion in the tone and tenor of that person’s voice. In response, it could give further driving directions in a slow, calm, moderated voice.

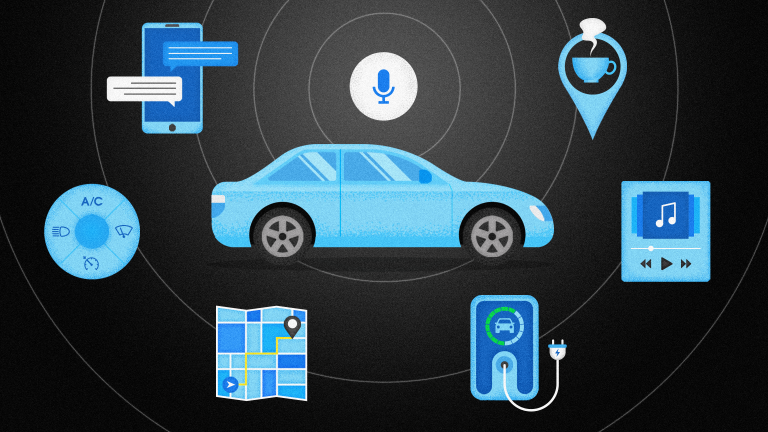

When the in-car voice assistant detects a potentially dangerous driving situation based on the driver’s state of mind, it can provide proactive suggestions. Lowering the temperature in the car, turning on the driver’s seat massage, dimming the dashboard lights, or turning on some soothing music may help to lower the driver’s stress levels.

In some cases, driving can simply be a boring, lonely experience. Currently, a voice assistant can act as a co-pilot, providing navigation, destination information, and in-car controls, which makes driving more convenient and safer through hands-free control.

If that same voice assistant has emotional intelligence, it can act as the front-seat passenger, providing companionship and alleviating boredom. Drivers on long road trips would then have a human-like companion to talk to while they drive, joking around and sharing information. Carrying on a conversation with another entity adds a level of interactivity to in-car entertainment that could dramatically decrease fatigue and daydreaming, and improve the safety of driving alone.