Since the introduction of voice assistants for the home, privacy and security concerns have been barriers to adoption. Recently, privacy has become a much bigger topic of conversation, especially in Europe, and people are very concerned about their data being sent to the cloud.

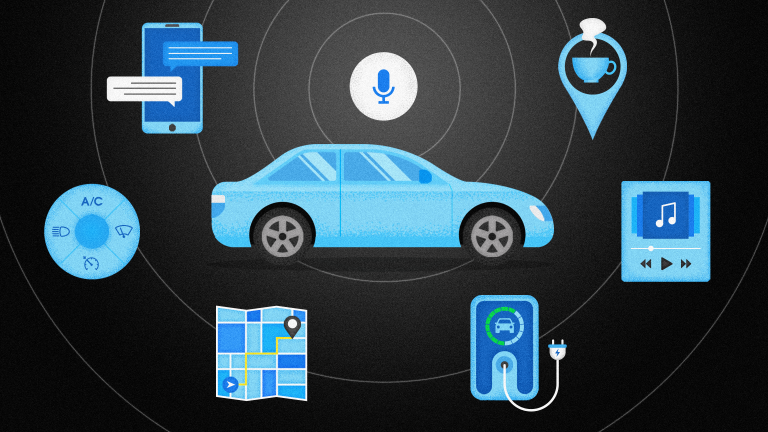

For applications where privacy is a concern, offline speech recognition can help allay those worries because the voice assistant needs no internet connection to operate. Again, you can think of voice user interfaces for smart thermostats, smart TVs, and even cars.

The biggest trade-off in implementing embedded technology is reduced robustness and breadth of the responses available. Although we provide a good rate of accuracy with our embedded technology without consuming too much memory, most embedded systems don’t have enough computing power to provide answers to complex and compound queries.

Embedded voice AI in noisy environments

For an embedded solution to be adapted to noisy environments, it’s best to know ahead of time what kind of noise the voice assistant is going to experience. For example, will it be used in public spaces, shops, train stations, or will it be in a home next to a washing machine or in a car?

No matter the environment, we can create custom acoustic models and work with the manufacturer to ensure their microphone setup can handle noisy conditions.

That’s not to say that a particular microphone is required for voice AI to work. While a noise-free input audio stream is beneficial for accuracy, in many cases manufacturers have already chosen their microphone provider. We are able to handle all kinds of noise environments, including car, airplane, and cafeteria noise, and we are willing to work with any microphone provider to deliver the best speech recognition experience possible.

Challenges of implementing an embedded or hybrid voice solution

Sometimes the greatest challenges don’t lie in the choice of connectivity options. Often, we find the greatest challenges come from preconceived notions of what voice AI is and what it can do.

Surprisingly, a lot of the clients I meet are still stuck on things from the past. They still think that people can’t talk to a computer in a natural human voice, so they phrase their queries to match short keywords: “deep, hot, cold, gray.” They don’t talk to a computer naturally, saying something like “Please make me a hot Earl Grey tea.” Instead they say “Tea, Earl Grey, hot,” as though Star Trek were the only language model for speaking with voice assistants.

I think people got used to the old models and speech recognition systems, and they haven’t adjusted their expectations to the newer technology that’s currently available.

In call center applications, for example, callers are still asked to choose from a menu of voiced selections, such as “Please press one in order to be connected to a representative,” or “Please press two in order to hear the balance of your credit card,” and so on. Surprisingly, I still see a lot of designs coming from that place, breaking things into very segmented intents without taking into account that human speech can be much more complicated than that.

Instead, developers should be thinking about designing voice assistants to respond to their customers in a much more human way. For example, “Please tell me my current credit card balance and when my next payment is due,” or “Close all the windows, turn on the air conditioning, and tune the radio to 88.5 FM.”

The answer to many natural language queries does not come from a single source. Instead, the data is gathered from across domains to deliver a single response. I think it’s important for developers to start thinking about designing the system from the point of view of talking to a fellow human, instead of initiating responses based on single keywords.
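To make the idea of a compound query concrete, here is a minimal Python sketch of one utterance being broken into several requests that are each routed to a different domain. It is only an illustration and not how any particular voice platform works: the domain names, the keyword table, and the helper functions `split_clauses`, `route`, and `plan` are all hypothetical, and the naive comma/“and” splitting stands in for what a real natural language understanding model would do.

```python
import re

# Hypothetical keyword table: which words hint at which domain.
# A production system would use a trained NLU model instead.
DOMAIN_KEYWORDS = {
    "windows": ("window",),
    "climate": ("air conditioning", "thermostat"),
    "radio": ("radio",),
}


def split_clauses(utterance):
    """Naively split a compound request on commas and the word 'and'."""
    text = utterance.strip().rstrip(".")
    parts = re.split(r",\s*(?:and\s+)?|\s+and\s+", text)
    return [part.strip() for part in parts if part.strip()]


def route(clause):
    """Return the first domain whose keywords appear in the clause."""
    lowered = clause.lower()
    for domain, keywords in DOMAIN_KEYWORDS.items():
        if any(keyword in lowered for keyword in keywords):
            return domain
    return "unknown"


def plan(utterance):
    """Turn one spoken request into a list of (domain, clause) steps."""
    return [(route(clause), clause) for clause in split_clauses(utterance)]


if __name__ == "__main__":
    request = ("Close all the windows, turn on the air conditioning, "
               "and tune the radio to 88.5 FM.")
    for domain, clause in plan(request):
        print(f"{domain}: {clause}")
```

The point of the sketch is the shape of the result: a single natural sentence yields three steps across three domains, rather than forcing the speaker through three separate keyword commands.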

After all, when someone gets into a taxi or an Uber, they don’t start by telling the driver, “Navigate to New York,” and the driver doesn’t respond, “OK, and now please tell me your street name,” and then, “Now please tell me your house number.” The passenger simply wants to be taken to a specific destination and should be able to express that in one statement. The driver already knows which city they’re in.